Blog: Simulating Real World Codebases and Thwarting Gen AI - R.W.'s Blog 🏳️⚧️

Written March 11th, 2025

(Maybe "Thwarting" is a bit click-baity)

About Shopazon

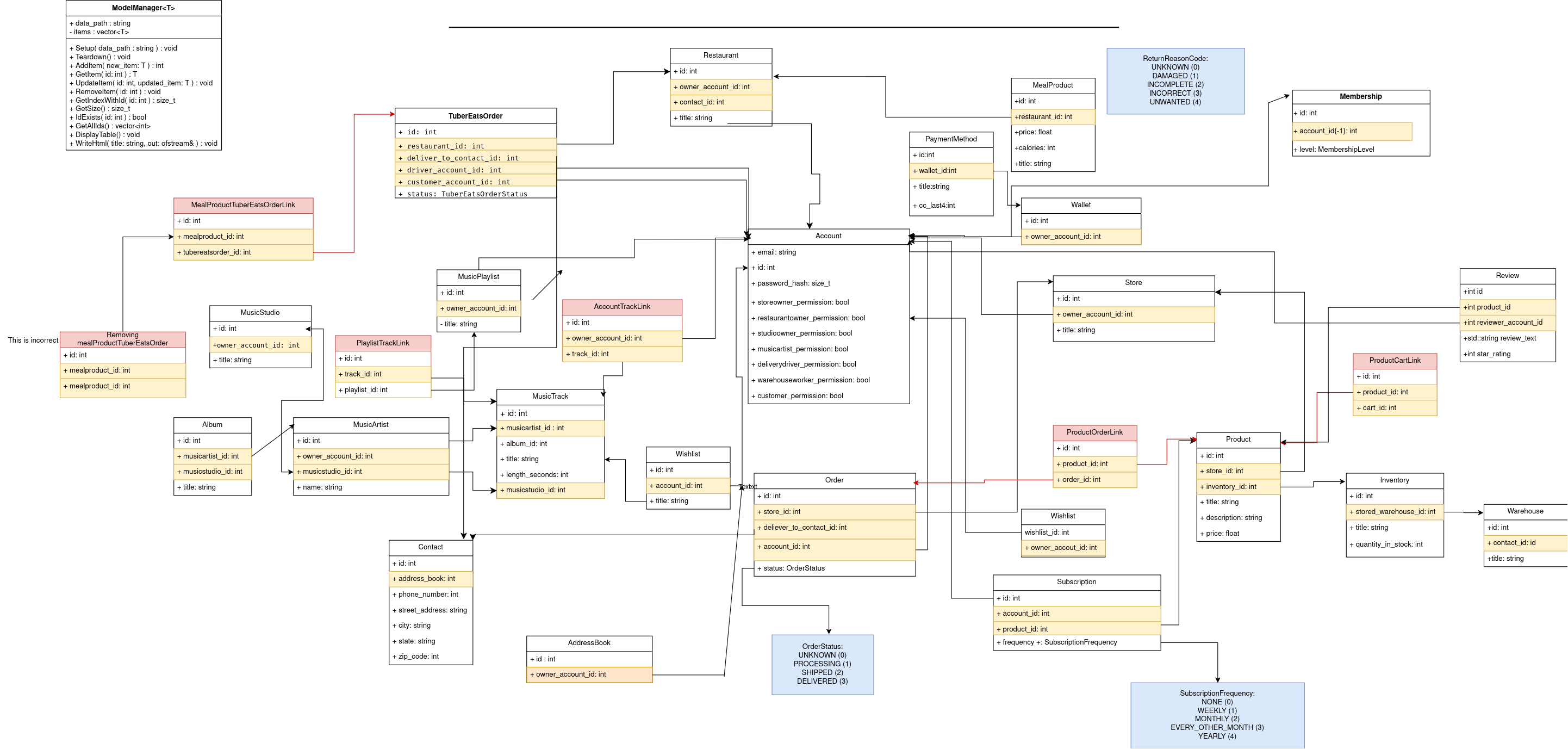

As of Spring 2024 I've had my CS 235: Object Oriented Programming with C++ students work with a mock codebase called Shopazon, modeled after features that Amazon and its products offer, as well as some other common apps. This includes Accounts, Stores, Products, Inventory, and Warehouse for the shopping side; MusicArtist, MusicStudio, Playlist, and Albums for the music streaming side; and even some models to imitate food delivery services.

In Fall 2024 I had students build out a model diagram, so you can view that here:

This shared codebase was where students would work over four sprints, where each iteration was one Project in the class. This is modeled after professional software development where developers commonly work in sprints, usually about 2 to 4 week increments.

I would create a pool of similar tickets for each iteration - e.g., first sprint is usually some kind of minor bug fix. Subsequent sprints might include adding unit tests, adding manager classes, hooking up functionality in the "front-end" (just a command line program). These features tie back to some topic we've covered in class: Static members, Polymorphism, Testing, Overloading, etc.

Each student assigns themself a ticket out of the pool and creates a merge request with their changes. As part of the project assignment they're also required to review someone else's merge request and submit a postmortem (What went well? What didn't go well? What to improve for next time?).

My goal is both to reinforce topics we're learning in class and to help them practice skills they would use in their daily lives as software developers: being professional in reviewing code and receiving critique, collaborating, learning their way around a codebase with many "moving parts", and reading and writing documentation.

~

How does this thwart cheaters?

With a lot of traditional programming assignments, the requirements for the entire program are written as part of the assignment documentation. A student can feed this into a Generative AI, which can somewhat complete the assignment because it has all of the requirements.

Or, if not using GenAI, a student might have someone else help them with the assignment. Another student might be proficient enough to read a few pages of assignment documentation and write the solution from scratch.

With the codebase project, I've found that in some cases the work certain students turn in is downright nonsensical, a sign that a student relying on outside resources isn't sure how to tackle a codebase with hundreds of files.

I can only imagine a student asking another person for help and their would-be helper deciding that learning a codebase just to help a friend cheat would be a huge waste of their time. With a GenAI, without the context of the entire codebase, what code would they paste in? Even if they give a summary of the ticket and the general location of the code, the GenAI won't have the context of all the other classes and functions that will be required for the program. And while a student might be able to find and copy/paste that code as well, if a student understood how to navigate the codebase themself, they may not feel the need to rely on GenAI to get their work done.

Additionally, using a codebase for projects helps me cut down on potential plagiarism because it means I have to make new tickets each semester, as each semester the codebase grows and has more features.

~

Nonsensical merge requests

Here are some examples of nonsensical merge requests I've seen over this semester and last, to better illustrate what gets turned in when a student who is over-reliant on outside help attempts this work.

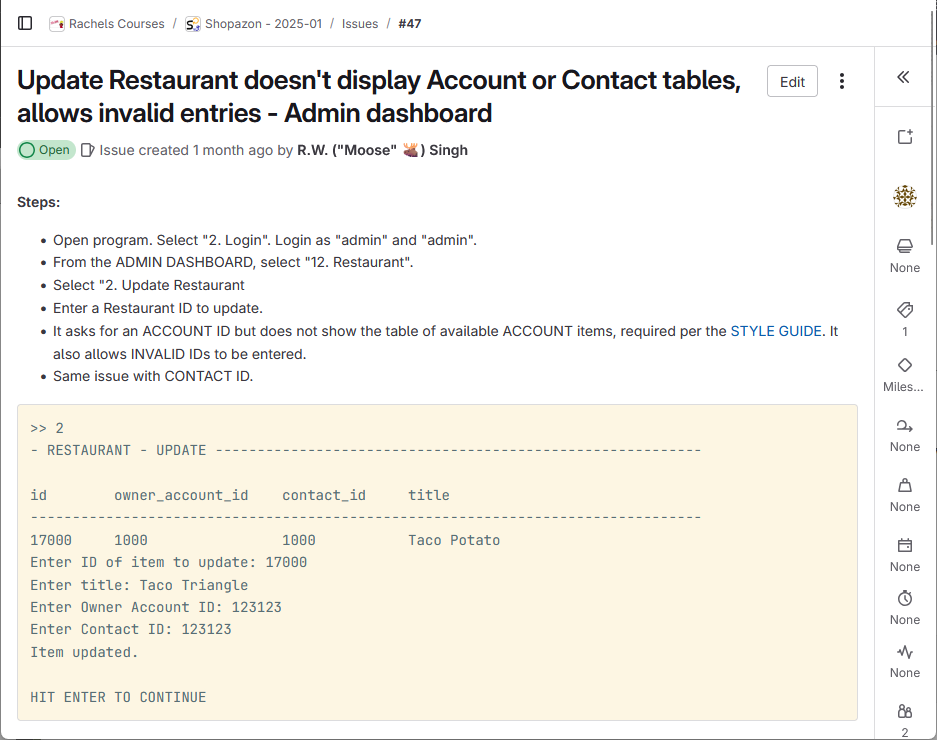

1. Update Restaurant doesn't display Account or Contact tables, allows invalid entries - Admin dashboard

For this bug fix ticket, reproduction steps were outlined in the ticket, and during class I gave a demo of using the Shopazon application and pointed out the locations in the code that handle these menus, along with the Models and Model Managers students would be working with.

The merge request was reviewed by another student before I got around to grading the projects, and they did a good job of summarizing what was wrong with the other student's changes in the code:

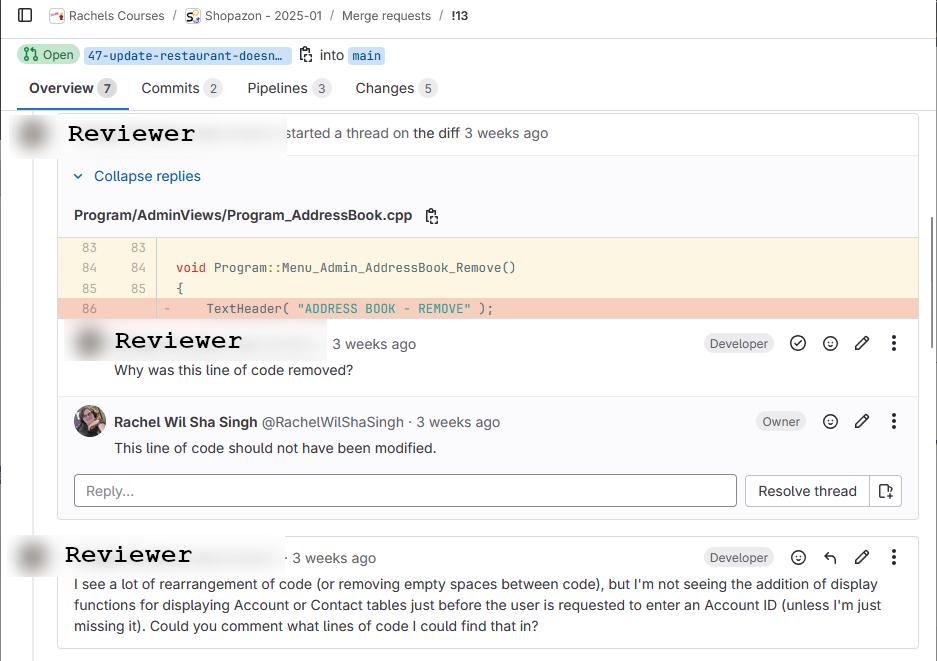

Why was this line of code removed?

I see a lot of rearrangement of code (or removing empty spaces between code), but I'm not seeing the addition of display functions for displaying Account or Contact tables just before the user is requested to enter an Account ID (unless I'm just missing it). Could you comment what lines of code I could find that in?

~

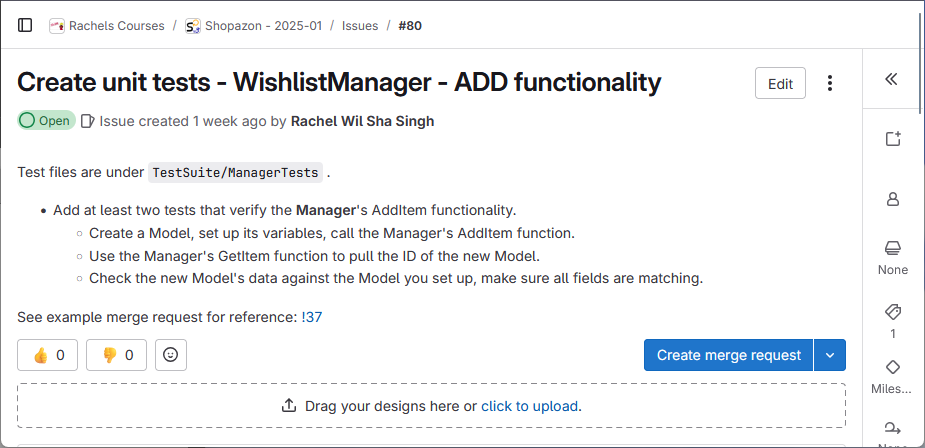

2. Create unit tests - WishlistManager - ADD functionality

For this ticket the requirements were to write two unit tests to verify that a model's Manager class added a new model item to the list, and that all data was unchanged during the Add/Get process.

I also provided a link to a merge request that I created, where I implemented a unit test for the AccountManager and Account classes. When working on features in the real world, there are often already some models that have been created and have code written for data access and manipulation, so it can be common to use code from elsewhere as reference.

With this student merge request, however, they ended up removing the empty function I had written (where they should have written their code), and then pasted the code from my AccountManager merge request in and submitted that.
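For readers who haven't seen this kind of ticket, here is a rough sketch of what the two requested tests could look like. None of this is the actual Shopazon code: the `Wishlist` fields, the `WishlistManager` functions (`Clear`, `AddItem`, `GetItem`, `GetCount`), and the test function names are all made up for illustration, and the real Manager classes and test framework may be shaped differently.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical model: the real Shopazon Wishlist class is not shown in this post.
struct Wishlist
{
    int id = 0;
    int accountId = 0;
    std::string name;
};

// Hypothetical manager with static members. The function names here are
// assumptions, not the codebase's actual interface.
class WishlistManager
{
public:
    static void Clear() { s_items.clear(); }
    static void AddItem(const Wishlist& item) { s_items.push_back(item); }
    static const Wishlist& GetItem(std::size_t index) { return s_items.at(index); }
    static std::size_t GetCount() { return s_items.size(); }

private:
    static std::vector<Wishlist> s_items;
};

std::vector<Wishlist> WishlistManager::s_items;

// Test 1: adding an item grows the list by exactly one.
bool Test_AddItem_IncreasesCount()
{
    WishlistManager::Clear();
    std::size_t before = WishlistManager::GetCount();

    Wishlist item;
    item.id = 1;
    item.accountId = 42;
    item.name = "Birthday ideas";
    WishlistManager::AddItem(item);

    return WishlistManager::GetCount() == before + 1;
}

// Test 2: the data read back after Add matches what was put in.
bool Test_AddItem_DataUnchanged()
{
    WishlistManager::Clear();

    Wishlist item;
    item.id = 7;
    item.accountId = 3;
    item.name = "Kitchen stuff";
    WishlistManager::AddItem(item);

    const Wishlist& stored = WishlistManager::GetItem(0);
    return stored.id == item.id
        && stored.accountId == item.accountId
        && stored.name == item.name;
}
```

Each function returns `true` on a pass, so whatever test runner the project uses could call them and report the results. The point is that both tests exercise the Wishlist classes specifically, which is exactly what pasting in the AccountManager test does not do.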

~

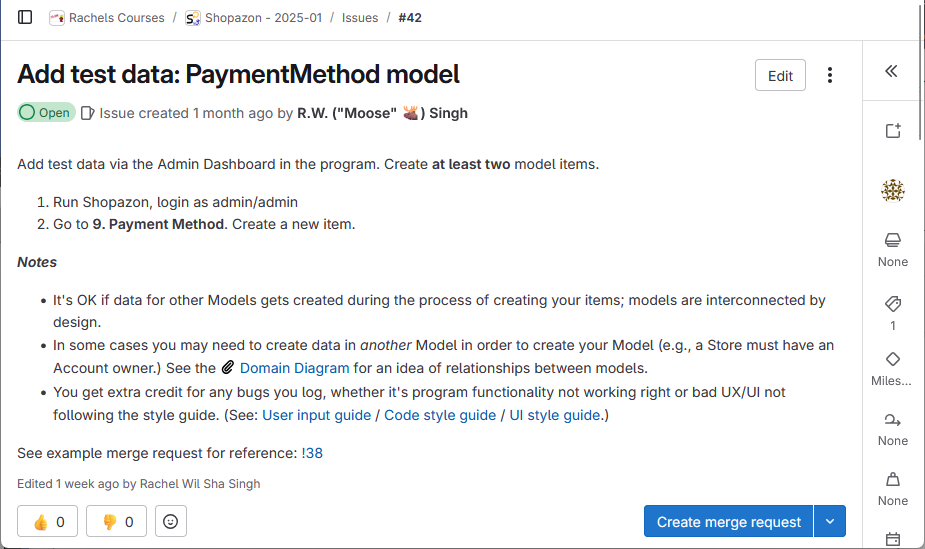

3. Add test data: PaymentMethod model

For this ticket students are instructed to just run the Shopazon program and log in as an admin, then use the admin menu to create a new record in the "database" (it's just a bunch of CSV files). In this case, no coding is required, just running the program and creating some new Accounts or Products or Restaurants, etc.

In the student's submitted merge request, they:

- Updated a test stub relating to the PaymentMethodManager.

- Called Manager functions and used variables that do not exist:

  - Incorrect: `manager.addPaymentMethod(method);`

  - Correct: `PaymentMethod item; /* [...item setup...] */ PaymentMethodManager::AddItem();`

  Except this isn't even correct because they weren't supposed to change any code, just run the program.

- For some reason, also edited my Makefile.

I've had project submissions from several students where it seems as if they're trying to randomly add code in hopes of getting partial credit, as nonsensical as their updates are. Usually, these are also students who have not been turning in weekly assignments for large chunks of the semester and try to play "catch-up" with the larger-point assignments.

~

Unique tickets each semester

Another way I reduce the ability to plagiarize for the semester programming projects is by writing new tickets each semester. Even if a student makes a copy of the codebase and posts it somewhere else for other students to "copy", the codebase is different each semester, in that it evolves with the new tickets I write and the features students implement.

I've tried to get all my labs to a complete state so I can focus more on exams and projects than on the weekly learning assignments. The labs are so small that it would be hard to really detect cheating: a lot of the code is my starter code, and students only write little bits for practice, then a slightly larger program as the graded part of the lab.

By making labs not a huge part of the grade, it can be okay for me to miss plagiarism there. And with that mental and time load freed up, I can focus that on the projects instead.

The first sprint of a semester usually includes bug fixes, then for the second, third, and fourth sprints I figure out a design to integrate something from class (e.g., Templates, Exceptions, Polymorphism, etc.) into the codebase as new features.

As the codebase has 24 Models, a lot of these features per sprint can be "variations on a theme". Each Model is unique, but throughout the codebase we'll be adding, removing, updating, and accessing each one.

New bugs for next semester :)

Something I enjoy about preserving the student-written code each semester is making the codebase "realistic" because there is a variety of code quality now in the codebase. (Note: I make a copy of the codebase each semester, so students aren't seeing previous semester student names.)

Some students might have written a solution that works but is a bit roundabout, or some students might have introduced a bug while implementing code. This often means I have a new batch of bugs I can use as the first sprint tasks for students in the following semester.

~

Of course, students might still cheat

It is still possible for students to cheat in some way, though for the projects, which model a real-world software development process, I don't consider brainstorming with classmates to be cheating. I express to my students that I don't want them to be plagiarizing - as in, passing off someone (or something) else's code as their own work.

Some of the techniques I have for guarding against GenAI usage can catch cheaters who are not very adept at it, the ones who just want something else to do their homework so they don't have to.

In my mind, the more skilled a student is at using their tools effectively to complete their work, the more likely they are to see the purpose of just doing the work themself.

For example, I could probably get GenAI to give me reasonable code for the Shopazon codebase. But to direct it properly and give it the information it needs to complete the task, I would have to know (or be able to analyze) the codebase well enough that I may as well write the code myself.

My other techniques for catching GenAI use on exams and other assignments could be gotten around by a student just being thorough in reviewing the assignment and their own work, but there are certainly students who haphazardly throw their assignments into GenAI and copy/paste what it gives back, probably in five seconds.